Dave Mark raises some interesting questions about artificial intelligence in games over at

AIGameDev.com. First, he explains that although we're seeing more and better

AI in games, a common complaint heard from gamers runs along the lines of "why can't they combine such and such AI feature from game X in game Y." Then, Dave poses the questions for developers to answer:

We can only cite limited technological resources for so long.

...

Perhaps, from a non-business standpoint... that of simply an AI developer, we should be asking ourselves what the challenges are in bringing all the top AI techniques together into the massive game environments that are so en vogue. What is the bottleneck? Is it money? Time? Hardware? Technology? Unwillingness? Unimaginativeness? A belief that those features are not wanted by the gamer? Or is it simply fear on the part of AI programmers to undertake those steps necessary to put that much life into such a massive world?

Let me first admit that I'd wager Dave Mark knows a lot more about this stuff than me.

That's how he makes a living, after all.

My experience in developing game AI comes from choose your-own-adventure text-based games as a kid (where the algorithm was very deterministic, with few options), making villagers walk around in Jamaicanmon!,

and making spaceships run away from you instead of seeking you out in Nebulus: Ozone Riders.

I even asked

Glitch, Wes, and Jonathan (teammates on the project) to remind me of some simple

vector math and draw it out on the wet erase board for Nebulus. And I still made them go the wrong direction (which ended up being pretty cool, actually).

In other words, I haven't had much experience with AI as it's typically implemented in games, and what little experience I

have had is limited to things I wouldn't (personally) classify as AI to begin with.

Still, I have had some experience in what I might call "classical" AI (perhaps "academic" is a better term).

Stuart Russell and Peter Norvig wrote the Microsoft of

books on Artificial Intelligence (

90% market share for AI textbooks), and I've read through a fair bit of it. I've implemented a couple of algorithms, but mostly I've just been introduced to concepts and skimmed the algorithms. In addition, I've been through Ethem Alpaydin's book,

Introduction to Machine Learning, which would have comparatively fewer ideas applicable to games.

I guess what I'm trying to say is: although I have some knowledge of AI, consider the fact that Dave's experience dwarfs

my own when I disagree with him here: It is precisely the fact that we don't have enough processing power that gets in the way of more realistic AI in our games. Or, put more accurately,

the problems we're trying to solve are intractable, and we've yet to find ways to fake all of them.

I'm not convinced you can separate the failures of AI from the intractability of the problems, or the inability to design and implement a machine to run

nondeterministic algorithms for those problems in

NP.

Compared to deciding how to act in

life, deciding how to act in Chess is infinitely more simple: there are a finite set of states, and if you had the time, you could plot each of the states and decide which move gets you the "closest" (for some definition of close) to where you'd like to be N moves from the decision point. Ideally N would get you to the end, but even for Chess, our ability is limited to look ahead only a small number of moves. Luckily for

Deep Blue (and others), it turns out that's enough to beat the best humans in the world.

Even though Chess is a complex problem whose number of game states prevent us from modeling the entire thing and deciding it, we can cheat and model fewer states - when we can make an informed decision that a particular path of the decision tree will not be followed, we can forgo computation of those nodes. Still yet, the problem will be huge.

There are other ways of "faking" answers to the AI problems that face us in development.

Approximation algorithms can get us close to the optimal solutions - but not necessarily all the way there. In these cases, we might notice the computer doing stupid things. We can tweak it - and we can make special case after special case when we notice undesired behavior. But we are limited in the balance between human-like and rational. Humans are not rational, but our programs (often) are made to be.

Presumably, they give the policeman in

GTA 4 a series of inputs and a decision mechanism, and he's thinking purely rationally: so sometimes the most rational thing for him to do based on the decision model we gave him is to run around the front of the car when he's being shot at. Sometimes he jumps off buildings. (Video via

Sharing the Sandbox: Can We Improve on GTA's Playmates?)

It may not be smart, but it is rational given the model we programmed.

You can make the decision model more complex, or you can program special cases. At some point, development time runs dry or computational complexity gets too high. Either way,

game AI sucks because the problems we're trying to solve have huge lower bounds for time and space complexity, and that requires us to hack around it given the time and equipment we have available. The problems usually win that battle.

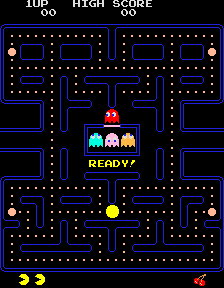

Game AI has come a long way since

Pacman's cologne (or maybe he just stunk), and it will get better, especially as we move more gaming super-powerful servers. Still, it's far from ideal at the moment.

What do you have to say? (Especially the gamer geeks: programmers

or players)

Hey! Why don't you make your life easier and subscribe to the full post

or short blurb RSS feed? I'm so confident you'll love my smelly pasta plate

wisdom that I'm offering a no-strings-attached, lifetime money back guarantee!

Leave a comment

Currently developing in Unreal Engine and they use a behavioral tree. It is totally convulted and I ended up just programming my own ai and it is a fascinating subject. The core of any ai is branch logic.

The newest greatest ai is now weight or score based. If something recieves a higher score then fo that.

Posted by John

on Nov 24, 2018 at 10:39 AM UTC - 6 hrs

Leave a comment